Ensuring efficient learning and fast convergence when training deep learning models can be a challenge. This is where normalization techniques come into play. Layer Normalization and Batch Normalization are two of the most popular methods used to stabilize training and improve neural network performance. While both aim to reduce internal covariate shift and make training more efficient, they differ in which dimension of the data they compute their normalization statistics over.

In this article, we'll discuss the distinction between Layer Normalization and Batch Normalization, how they operate, and under what circumstances one would be more useful than the other for deep learning applications.

The Basics of Normalization in Machine Learning

Before discussing Layer Normalization and Batch Normalization, it's critical to understand why normalization methods are applied in machine learning, specifically in neural networks.

Deep learning models tend to suffer from internal covariate shift, where the distribution of a layer's inputs changes during training as the parameters of the preceding layers are updated. This shift can slow training down and make it harder to converge to a good solution. Normalization mitigates the issue by recentering and rescaling the inputs of every layer so that their distribution stays stable during training. This leads to quicker convergence, decreased hyperparameter sensitivity, and a more stable model.

What Is Batch Normalization?

Batch Normalization (BN) was proposed as a fix for the problem of internal covariate shift. It normalizes a layer's activations using statistics (mean and variance) calculated over a mini-batch of data, and then scales and shifts the result. In effect, during training each feature of a layer's activations is brought to a mean of zero and a standard deviation of one across the mini-batch before the scale and shift are applied.

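At training time, Batch Normalization computes the mean and variance of the activations within a mini-batch, normalizes the data, and applies a learnable scaling factor and bias. At inference time, it typically replaces the mini-batch statistics with running averages collected during training, so the output for one example does not depend on the other examples in the batch. Normalization stabilizes training by reducing internal covariate shift.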
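The training-time computation described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a framework implementation: the function name `batch_norm_train`, the `(batch, features)` input layout, and the `eps` value are assumptions made for the example.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Normalize a (batch, features) array with per-feature mini-batch statistics."""
    mean = x.mean(axis=0)                     # one mean per feature, taken across the batch
    var = x.var(axis=0)                       # one (biased) variance per feature
    x_hat = (x - mean) / np.sqrt(var + eps)   # eps (assumed value) avoids division by zero
    return gamma * x_hat + beta               # learnable scaling factor and bias

rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(32, 4))   # 32 samples, 4 features
gamma = np.ones(4)    # scale initialized to 1, so the output is just x_hat
beta = np.zeros(4)    # bias initialized to 0
y = batch_norm_train(x, gamma, beta)
```

With `gamma` at one and `beta` at zero, every column of `y` has a mean close to zero and a standard deviation close to one, regardless of the original offset and spread of `x`.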

Batch Normalization became very popular in the deep learning community because of its practical benefits: it speeds up training, decreases sensitivity to the initial choice of weights, and, in certain cases, permits higher learning rates, resulting in better overall performance. It performs particularly well in convolutional neural networks (CNNs) and in other architectures where training speed is a critical consideration.

However, Batch Normalization does have limitations. Since it relies on mini-batch statistics, its performance can degrade when training on small batches or with highly variable batch sizes, because the estimated mean and variance become noisy. It is also awkward to apply to models that process sequences step by step, such as recurrent neural networks (RNNs), and to tasks with variable input sizes, such as natural language processing.

What Is Layer Normalization?

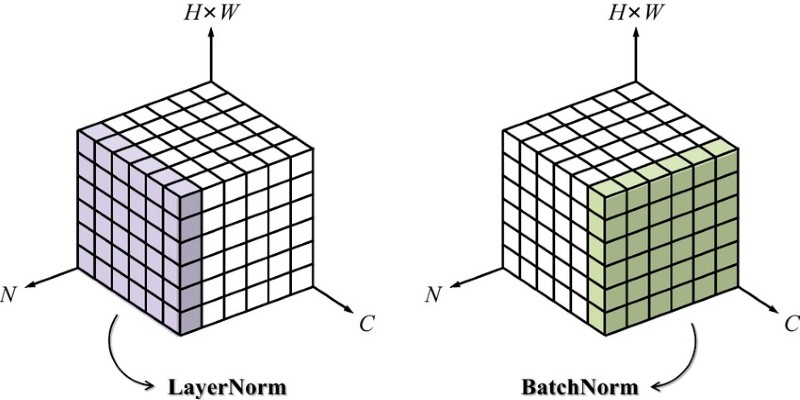

In contrast to Batch Normalization, Layer Normalization (LN) normalizes the activations across the features of each training example rather than across a mini-batch of data. This means that LN computes a separate mean and variance for each example, not for the batch as a whole. Because the activations of each layer are normalized on a per-example basis, LN is particularly suitable for cases where batch sizes are small or where the model requires more flexibility in handling sequential data.

Layer Normalization is commonly used in recurrent neural networks (RNNs) and transformer architectures, where processing individual time steps independently is often preferred. Since it doesn’t rely on the mini-batch statistics, it doesn’t suffer from the issues that Batch Normalization faces with small batch sizes or variable input sizes.

Similar to Batch Normalization, Layer Normalization also applies a learnable scaling factor and bias after the normalization process. This helps preserve the model’s capacity to learn complex patterns without introducing undesirable biases in the normalized data.
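As a minimal sketch of the per-example computation, assuming a `(batch, features)` input layout and an illustrative `eps` value, Layer Normalization differs from Batch Normalization only in the axis over which the mean and variance are taken. The function name `layer_norm` is chosen for this example.

```python
import numpy as np

def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalize each row of a (batch, features) array with its own statistics."""
    mean = x.mean(axis=-1, keepdims=True)     # one mean per example, taken across features
    var = x.var(axis=-1, keepdims=True)       # one (biased) variance per example
    x_hat = (x - mean) / np.sqrt(var + eps)   # eps (assumed value) avoids division by zero
    return gamma * x_hat + beta               # learnable scaling factor and bias

rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(2, 8))    # 2 examples, 8 features
gamma, beta = np.ones(8), np.zeros(8)

out = layer_norm(x, gamma, beta)          # both examples processed together
single = layer_norm(x[:1], gamma, beta)   # first example processed on its own
```

Here `out[:1]` and `single` agree, which illustrates that the result for one example does not depend on what else is in the batch.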

Key Differences Between Layer Normalization and Batch Normalization

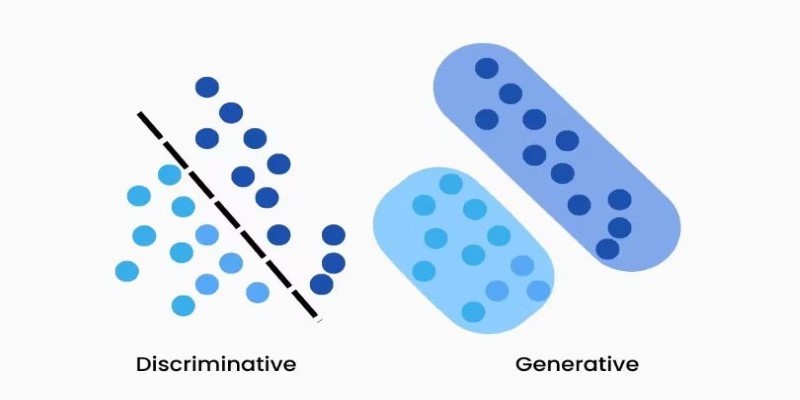

At their core, the key difference between Layer Normalization and Batch Normalization lies in how they compute the normalization statistics. Batch Normalization normalizes over a mini-batch, while Layer Normalization normalizes across each input.

Batch-Level vs. Sample-Level Normalization:

Batch Normalization relies on statistics computed over a mini-batch of data, which means that it uses multiple samples at once to calculate the mean and variance. On the other hand, Layer Normalization computes the mean and variance over all the units of a single layer per individual input. This distinction is critical when selecting the appropriate normalization technique based on the nature of your data and the model.
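A tiny numeric example can make the two directions concrete. The sketch below assumes activations stored as a `(samples, features)` array and only computes the means; the variable names are illustrative.

```python
import numpy as np

# 3 samples (rows), 4 features (columns)
x = np.arange(12, dtype=float).reshape(3, 4)
# [[ 0.  1.  2.  3.]
#  [ 4.  5.  6.  7.]
#  [ 8.  9. 10. 11.]]

bn_mean = x.mean(axis=0)   # batch-level: one value per feature  -> [4. 5. 6. 7.]
ln_mean = x.mean(axis=1)   # sample-level: one value per sample  -> [1.5 5.5 9.5]
```

Batch-level statistics have as many entries as there are features, while sample-level statistics have as many entries as there are samples.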

Sensitivity to Batch Size:

Batch Normalization can struggle with small batch sizes because the statistics it uses are less reliable. In cases with very small batches or single-sample processing, Batch Normalization might fail to provide meaningful results. Layer Normalization, however, works independently of batch size, making it more flexible in scenarios where batch sizes can be highly variable, such as in natural language processing tasks.
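The extreme case of a single-sample batch shows why. In the sketch below (a simplified `normalize` helper written for this example, without the learnable scale and bias), normalizing with batch statistics collapses every activation to zero, because each feature is compared only with itself, while per-sample statistics still produce a meaningful result.

```python
import numpy as np

def normalize(x, axis, eps=1e-5):
    """Subtract the mean and divide by the standard deviation along the given axis."""
    mean = x.mean(axis=axis, keepdims=True)
    var = x.var(axis=axis, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

sample = np.array([[1.0, 2.0, 4.0, 8.0]])   # a batch containing one example

bn_out = normalize(sample, axis=0)   # batch statistics: mean equals the sample, so output is all zeros
ln_out = normalize(sample, axis=1)   # per-sample statistics: relative ordering of features is kept
```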

Use Cases:

Batch Normalization has proven extremely effective in convolutional neural networks (CNNs), where large batches and independent samples per batch are common. It is also widely used in computer vision and tasks with fixed input sizes.

In contrast, Layer Normalization is more suitable for tasks involving sequential data, like time series prediction or natural language processing, particularly in models like RNNs and transformers, where each sample is processed independently.

Training Speed and Efficiency:

Batch Normalization can accelerate training by making higher learning rates usable, but it requires additional bookkeeping: the mini-batch statistics must be computed at every step, and running estimates of them are usually maintained for use at inference time. Layer Normalization, on the other hand, computes its statistics from each sample alone and behaves the same way during training and inference. This may lead to slightly slower training in some scenarios, but it is generally more stable and less dependent on the batch size.
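The statistics bookkeeping for Batch Normalization can be sketched as an exponential moving average that is updated on every training batch and then reused at inference time. The class name `RunningBatchStats` and the `momentum` value of 0.1 are assumptions for illustration; frameworks differ in the exact update rule.

```python
import numpy as np

class RunningBatchStats:
    """Hypothetical helper that tracks per-feature running mean and variance."""

    def __init__(self, num_features, momentum=0.1):
        self.momentum = momentum              # assumed value, not taken from the article
        self.mean = np.zeros(num_features)
        self.var = np.ones(num_features)

    def update(self, x):
        # Blend the current mini-batch statistics into the running estimates.
        m = self.momentum
        self.mean = (1 - m) * self.mean + m * x.mean(axis=0)
        self.var = (1 - m) * self.var + m * x.var(axis=0)

    def normalize(self, x, eps=1e-5):
        # Inference path: use the stored estimates, not the statistics of x.
        return (x - self.mean) / np.sqrt(self.var + eps)

rng = np.random.default_rng(0)
stats = RunningBatchStats(num_features=4)
for _ in range(200):                          # simulated training batches
    stats.update(rng.normal(loc=3.0, scale=2.0, size=(64, 4)))

one_example = np.full((1, 4), 3.0)
normalized = stats.normalize(one_example)     # works even for a single example
```

After the simulated training loop, the running mean and variance settle near the true values of the data (3 and 4 in this setup), so a single example can be normalized without any batch. Layer Normalization needs no such state.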

Conclusion

Both Layer Normalization and Batch Normalization serve crucial roles in optimizing deep learning models, but their applications differ based on the task at hand. Batch Normalization is well suited to tasks with large, consistent batch sizes, particularly in CNNs. Layer Normalization, on the other hand, excels in sequence-based models such as RNNs and transformers, which are common in NLP tasks where flexibility and smaller batches are key. Understanding their differences allows you to choose the right technique for better training stability and efficiency.