Artificial intelligence has evolved significantly, with technologies like Generative AI and Large Language Models (LLMs) leading the way. While the two share foundational principles, they differ in purpose and function. Generative AI focuses on creating new, original content, such as images and music, based on patterns learned from data. LLMs, a language-focused branch of generative AI, are designed to understand and generate human language, which makes them well suited to tasks like chatbots, translation, and text analysis.

In this article, we'll explore the core differences between these two technologies, how they operate, and their unique applications across industries like healthcare, entertainment, and more.

What is Generative AI?

Generative AI is a subset of artificial intelligence focused on producing new data based on patterns learned from existing datasets. It uses a range of algorithms and models to create content that closely resembles its training data without simply copying it. For instance, after analyzing large datasets of examples, Generative AI can produce artwork, write articles, or compose music.

The strength of Generative AI lies in recognizing the underlying patterns in its training data and applying them to produce new, original material. This goes beyond imitation: the result can be entirely new while still looking convincing, as if a person had made it. AI-generated artwork and blog posts written by AI systems are familiar examples.

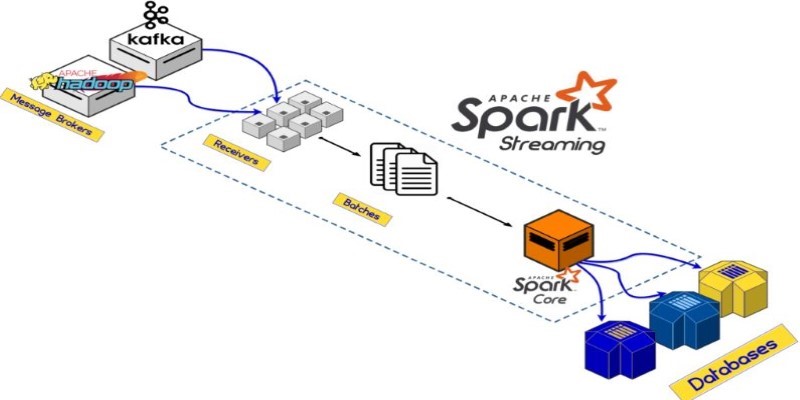

One widely used family of Generative AI models, GANs (Generative Adversarial Networks), pairs two neural networks that are trained against each other: a generator creates new samples, and a discriminator tries to tell those samples apart from real data. Each round of training pushes the generator to produce more convincing output and the discriminator to get better at spotting generated samples. Through approaches like this, Generative AI can produce realistic imagery, believable text, and even new video material.
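To make the adversarial setup concrete, here is a minimal sketch of the two opposing objectives in the standard GAN formulation, in plain Python. It assumes the discriminator outputs a probability that its input is real; the function names are hypothetical, and the generator loss shown is the common "non-saturating" variant rather than the only option.

```python
import math


def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """Binary cross-entropy for the discriminator.

    d_real: discriminator outputs (probability "real") on real samples.
    d_fake: discriminator outputs on generated samples.
    The loss is low when D(real) is near 1 and D(fake) is near 0.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: low when D is fooled (D(fake) near 1)."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    # A discriminator that confidently separates real from generated samples.
    confident = ([0.95, 0.9], [0.05, 0.1])
    # A discriminator reduced to guessing: 0.5 for every input.
    confused = ([0.5, 0.5], [0.5, 0.5])

    for name, (d_real, d_fake) in [("confident", confident), ("confused", confused)]:
        print(name,
              "D loss:", round(discriminator_loss(d_real, d_fake), 3),
              "G loss:", round(generator_loss(d_fake), 3))
```

With the confident discriminator, the D loss is about 0.157 and the G loss about 2.649, so the generator is under strong pressure to improve. When the discriminator can only guess 0.5, the losses become 2·ln 2 ≈ 1.386 and ln 2 ≈ 0.693, which is the point where generated samples can no longer be told apart from real ones.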

What Are Large Language Models?

Large Language Models (LLMs), on the other hand, are AI models designed to process and generate human language. Models such as OpenAI's GPT-3 and Google's BERT are trained on vast amounts of text to learn language patterns. GPT-3 learns to predict the next token in a sequence, which lets it generate coherent, contextually appropriate responses, while BERT learns to fill in masked-out words and is used mainly for understanding tasks such as classification and search rather than open-ended generation.
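The idea of learning to predict language patterns can be illustrated with a deliberately tiny stand-in: a bigram model that counts which word follows which in a training text, then predicts the most frequent follower. This is not how GPT-3 or BERT work internally (they use neural networks over tokens, not word counts), and the corpus and function names below are hypothetical; the sketch only shows the predict-the-next-word loop that GPT-style models also use to generate text.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    # Ties are broken by the order in which followers were first seen.
    return counts.most_common(1)[0][0]


def generate(follows: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Greedy generation: repeatedly append the single most likely next word."""
    out = [start.lower()]
    for _ in range(max_words):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


if __name__ == "__main__":
    corpus = "the cat sat on the mat . the cat ate the fish ."
    model = train_bigrams(corpus)
    print(predict_next(model, "the"))
    print(generate(model, "the", max_words=5))
```

Here "the" is followed by "cat" twice, so the model predicts "cat", and greedy generation produces "the cat sat on the cat". That quick loop back to an earlier phrase is one reason real LLMs condition on much longer context and often sample from the predicted distribution instead of always taking the top word.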

Large Language Models primarily focus on natural language, enabling applications like chatbots, automatic translation, and content generation. Whereas Generative AI as a whole covers images, audio, and other media, LLMs are specialized to process, interpret, and predict text in a way that mimics human conversation.

LLMs are built on deep learning architectures, specifically transformers, whose attention mechanism lets the model weigh how strongly each word in a passage relates to every other word, and so learn complex relationships across large text datasets. These models can answer a user's query, summarize long documents, or even produce creative writing, though their focus remains on processing and understanding language.
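The core operation inside a transformer is scaled dot-product attention, softmax(Q·Kᵀ / √d)·V: each token's query vector is compared with every token's key vector, the scores are turned into weights with a softmax, and the output is the weighted average of the value vectors. Below is a minimal single-head sketch in plain Python, with no learned weights, batching, or masking; the toy vectors are made up for illustration.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Convert raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: list[list[float]],
              keys: list[list[float]],
              values: list[list[float]]) -> tuple[list[list[float]], list[list[float]]]:
    """Scaled dot-product attention for a single head.

    queries, keys: d-dimensional vectors, one per token.
    values: one vector per token (same count as keys).
    Returns one output vector per query, plus the attention weights.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors, one component at a time.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # Three toy tokens with 2-dimensional embeddings. In a real transformer,
    # Q, K and V are produced by learned linear projections of the embeddings.
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    out, weights = attention(x, x, x)
    for w in weights:
        print([round(p, 3) for p in w])
```

Each printed row is one token's attention distribution over all three tokens. Stacking many such heads, with learned projections and feed-forward layers in between, is what lets a transformer relate words that are far apart in a passage.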

A key characteristic of LLMs is that they can be adapted to the context in which they are deployed, most commonly through fine-tuning: continuing to train a pretrained model on a smaller, domain-specific dataset. For example, an LLM fine-tuned on medical journals is better suited to answering healthcare queries, while the same base model fine-tuned for customer service will generate responses appropriate to that context instead.
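Fine-tuning is, at heart, continued training: start from parameters learned on broad data and keep applying the same gradient-descent updates on a smaller, specialized dataset. The sketch below shows only that mechanism, using a hypothetical one-parameter model y = w·x in place of an LLM's billions of weights; the "general" and "domain" datasets are made up.

```python
def mse(w: float, data: list[tuple[float, float]]) -> float:
    """Mean squared error of the toy model y = w * x on (x, y) pairs."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def train(w: float, data: list[tuple[float, float]],
          lr: float = 0.01, steps: int = 100) -> float:
    """Plain gradient descent on the MSE, starting from the given weight."""
    for _ in range(steps):
        # d/dw of mean((w*x - y)^2) is mean(2 * x * (w*x - y)).
        grad = sum(2 * x * (w * x - y) for x, y in data) / len(data)
        w -= lr * grad
    return w


if __name__ == "__main__":
    general = [(1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]  # broad data: y = x
    domain = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]   # specialized data: y = 3x

    pretrained = train(0.0, general)       # "pre-training" from scratch
    finetuned = train(pretrained, domain)  # "fine-tuning" from the pretrained weight
    print("weights:", round(pretrained, 3), round(finetuned, 3))
    print("domain error before/after:",
          round(mse(pretrained, domain), 3), round(mse(finetuned, domain), 3))
```

The fine-tuned weight fits the domain data far better than the pretrained one. Because nothing anchors this toy to its general data, it also forgets y = x completely; in practice, fine-tuning usually runs for fewer steps and at lower learning rates, partly to limit that kind of forgetting.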

Key Differences Between Generative AI and Large Language Models

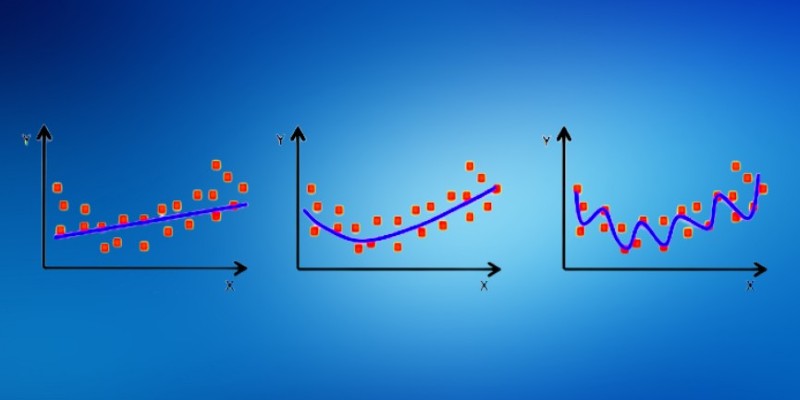

While both Generative AI and large language models use advanced machine learning techniques, they differ significantly in their applications and functionality.

Purpose and Output

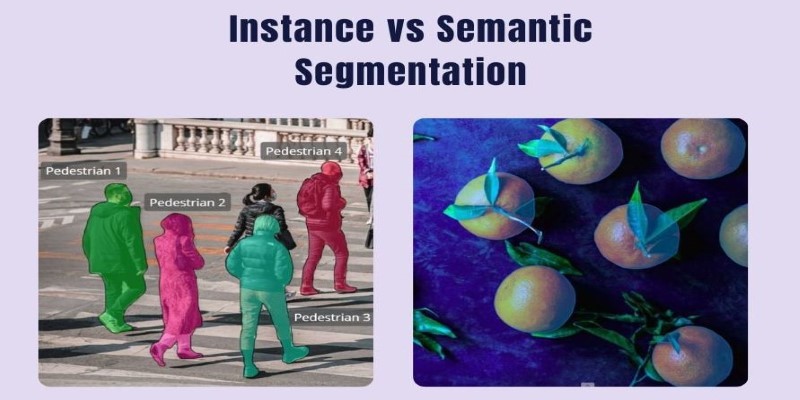

Generative AI is focused on creating new, original content, while large language models are focused on understanding and generating human language. Generative AI creates images, music, and even synthetic data, whereas LLMs generate responses to textual queries, interpret written content, and simulate human-like conversation.

Model Architecture

Generative AI models often rely on techniques like GANs or VAEs (Variational Autoencoders) to create new content. LLMs, by contrast, are generally based on transformer architectures, which excel at processing sequential data like text. This architectural difference underlies much of their different functionality, although the boundary is not strict: transformers are also used in some image and audio generators.
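For the VAE family, two ingredients are easy to show in isolation. The first is the reparameterization trick, which draws a latent sample as z = μ + σ·ε with ε from a standard normal, so the sample stays a differentiable function of μ and σ. The second is the closed-form KL divergence between the encoder's Gaussian N(μ, σ²) and the standard normal prior, a term the VAE loss adds to the reconstruction error. The sketch below uses one latent dimension and made-up numbers; in a real VAE, an encoder network outputs μ and log σ² for each input.

```python
import math
import random


def reparameterize(mu: float, log_var: float, rng: random.Random) -> float:
    """Sample z ~ N(mu, sigma^2) as mu + sigma * eps, with eps ~ N(0, 1).

    Writing the sample this way keeps z a differentiable function of mu and
    log_var, which is what lets a VAE train its encoder by backpropagation.
    """
    sigma = math.exp(0.5 * log_var)
    eps = rng.gauss(0.0, 1.0)
    return mu + sigma * eps


def kl_to_standard_normal(mu: float, log_var: float) -> float:
    """KL( N(mu, sigma^2) || N(0, 1) ) for one latent dimension."""
    return 0.5 * (mu ** 2 + math.exp(log_var) - 1.0 - log_var)


if __name__ == "__main__":
    rng = random.Random(0)
    samples = [reparameterize(2.0, math.log(0.25), rng) for _ in range(10_000)]
    print("sample mean:", round(sum(samples) / len(samples), 2))  # close to mu = 2.0
    print("KL, matches prior:", kl_to_standard_normal(0.0, 0.0))
    print("KL, shifted mean:", kl_to_standard_normal(2.0, 0.0))
```

The KL term is zero when the encoder's distribution equals the prior and grows as the mean or variance drifts away from it. That pull toward N(0, 1) is what keeps the latent space smooth enough that decoding a fresh sample from the prior tends to yield plausible new content.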

Training Data

Generative AI models are trained on datasets that match what they produce, such as images, audio, or a mix of several modalities, while Large Language Models are trained mainly on vast amounts of text. The kind of training data largely determines the kind of output each model can produce.

Applications

Generative AI is primarily used in creative fields, such as art, music composition, and design, where novel content is essential. In contrast, Large Language Models are more commonly used in natural language processing applications, such as virtual assistants, chatbots, and automated translation services.

How Do These Technologies Impact Various Industries?

Both Generative AI and Large Language Models have far-reaching implications in a variety of industries.

In healthcare, Generative AI is being used to help develop new drugs and medical treatments, for example by proposing candidate molecules or generating synthetic biological data. Large Language Models, on the other hand, help process and analyze medical literature, letting clinicians find information through natural language queries, although their answers still need to be verified because LLMs can produce plausible but incorrect statements.

In the entertainment industry, Generative AI is already being used to create art and music, and it could open up new ways of producing visual and audio content. Meanwhile, LLMs can improve the user experience on streaming platforms, for example by interpreting natural-language search queries and supporting content recommendations.

In education, Generative AI can create customized learning materials, while LLMs can assist with language learning, tutoring, and even personalized feedback for students.

Conclusion

Generative AI and large language models are transformative technologies with distinct roles. Generative AI excels at creating new, original content, which makes it valuable in creative industries, while large language models specialize in understanding and generating human language for tasks like communication and text analysis. Although they serve different purposes, both are shaping the future of AI, and as they evolve, hybrid systems that combine them may become even more capable and versatile across industries.