In the world of machine learning, understanding the differences between discriminative and generative models is crucial for anyone diving into the field. Both types of models serve as essential building blocks for artificial intelligence, but they approach data and learning in fundamentally different ways. While discriminative models focus on distinguishing between classes of data, generative models aim to understand the underlying distribution of the data itself.

This article will explain the core differences between these two model types, their advantages, and where each might be more applicable in real-world scenarios. By the end, you'll have a better idea of when and why you'd want to use one method over the other.

What is a Discriminative Model?

Discriminative models are built to differentiate between classes of data. They learn the boundary between classes, which makes them well suited to classification tasks. Instead of modeling the data itself, a discriminative model models the conditional probability of a label given the data, P(y | x). For instance, in image classification, the model learns to identify features that distinguish one class (e.g., cats) from another (e.g., dogs).

A well-known example of a discriminative model is logistic regression, which fits its parameters by maximizing the conditional likelihood of the observed labels given the features. In this sense, discriminative models care about which class a data point belongs to, without attempting to model the complete data distribution.
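To make the logistic regression example concrete, here is a minimal sketch in plain Python. The function names (`fit_logistic_regression`, `predict_proba`), the learning rate, and the toy two-cluster dataset are illustrative assumptions, not part of any particular library. Note that the model only ever estimates P(y | x); nothing in it describes how the inputs themselves are distributed.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(x, w, b):
    # Estimated P(y = 1 | x) for a single feature vector x.
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fit_logistic_regression(xs, ys, lr=0.1, epochs=500):
    # Gradient ascent on the average conditional log-likelihood of y given x.
    n, n_features = len(xs), len(xs[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(xs, ys):
            err = y - predict_proba(x, w, b)
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wi + lr * g / n for wi, g in zip(w, grad_w)]
        b += lr * grad_b / n
    return w, b


# Hypothetical toy data: two Gaussian clusters standing in for two classes.
rng = random.Random(0)
class0 = [[rng.gauss(-2.0, 1.0), rng.gauss(-2.0, 1.0)] for _ in range(50)]
class1 = [[rng.gauss(2.0, 1.0), rng.gauss(2.0, 1.0)] for _ in range(50)]
xs, ys = class0 + class1, [0] * 50 + [1] * 50

w, b = fit_logistic_regression(xs, ys)
p_pos = predict_proba([2.0, 2.0], w, b)    # point near the class-1 cluster
p_neg = predict_proba([-2.0, -2.0], w, b)  # point near the class-0 cluster
```

The learned weights define a linear decision boundary. The model can score new points, but it has no way to produce a new point of its own.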

Pros of Discriminative Models

One of the strongest points in favor of discriminative models is their classification accuracy. Because they concentrate on class boundaries, they are often more accurate than generative models when a task involves only classification. Discriminative models can also scale to large datasets and handle complex data types.

Further, discriminative models tend to generalize well. Because they spend their capacity on distinguishing classes rather than on modeling the inputs, they usually perform better than generative models when ample labeled data are available. In supervised learning, where labeled data play a critical role, discriminative models can reach good performance quickly.

Cons of Discriminative Models

However, the focus on class boundaries also limits the flexibility of discriminative models. Since these models do not attempt to understand how the data is generated, they cannot generate new data points. This lack of generative ability can be a drawback in applications like data augmentation or generative tasks, where new data samples are required.

Moreover, discriminative models rely heavily on labeled data. In situations where labeled data is scarce or expensive to obtain, these models can perform poorly. They also tend to struggle with capturing the full variability of the data, as their primary goal is classification rather than understanding the data distribution.

What is a Generative Model?

Generative models, on the other hand, take a broader approach. These models focus on learning the underlying distribution of the data, effectively modeling how data is generated. Rather than just distinguishing between classes, generative models learn to generate new samples from the same distribution as the data they are trained on. This makes them suitable for tasks that require the creation of new data points, such as image generation, text synthesis, or even predicting missing data.

A common example of a generative model is the Naive Bayes classifier, which works by estimating the joint probability of the features and the label, P(x, y) = P(x | y) P(y). Because they model how data is generated, generative models can produce data that resembles the training data, which makes them highly versatile.
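As a rough illustration of the Naive Bayes idea, the sketch below fits a Gaussian Naive Bayes model in plain Python. The helper names (`fit_gaussian_nb`, `log_joint`, `predict`, `sample`), the Gaussian assumption for each feature, and the toy data are all assumptions made for this example. Because the model estimates the joint probability, the same fitted parameters can be used both to classify and to draw new samples.

```python
import math
import random


def fit_gaussian_nb(xs, ys):
    # Estimate the prior P(y) and a per-feature Gaussian P(x_j | y) per class.
    model, n = {}, len(xs)
    for label in sorted(set(ys)):
        rows = [x for x, y in zip(xs, ys) if y == label]
        means = [sum(col) / len(rows) for col in zip(*rows)]
        variances = [
            sum((v - m) ** 2 for v in col) / len(rows) + 1e-9  # avoid zero variance
            for col, m in zip(zip(*rows), means)
        ]
        model[label] = {"prior": len(rows) / n, "means": means, "vars": variances}
    return model


def log_joint(x, label, model):
    # log P(x, y) = log P(y) + sum_j log P(x_j | y)
    p = model[label]
    total = math.log(p["prior"])
    for v, m, var in zip(x, p["means"], p["vars"]):
        total += -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
    return total


def predict(x, model):
    # Classify by picking the label with the highest joint probability.
    return max(model, key=lambda label: log_joint(x, label, model))


def sample(model, rng):
    # Generate a new point: draw a label from P(y), then features from P(x | y).
    labels = list(model)
    label = rng.choices(labels, weights=[model[l]["prior"] for l in labels])[0]
    p = model[label]
    x = [rng.gauss(m, math.sqrt(var)) for m, var in zip(p["means"], p["vars"])]
    return x, label


# Hypothetical toy data: two Gaussian clusters standing in for two classes.
rng = random.Random(0)
class0 = [[rng.gauss(-2.0, 1.0), rng.gauss(-2.0, 1.0)] for _ in range(50)]
class1 = [[rng.gauss(2.0, 1.0), rng.gauss(2.0, 1.0)] for _ in range(50)]
xs, ys = class0 + class1, [0] * 50 + [1] * 50

model = fit_gaussian_nb(xs, ys)
new_x, new_label = sample(model, rng)  # a synthetic (features, label) pair
```

The `sample` function is what a purely discriminative classifier lacks: it follows the assumed generative story directly, drawing a label first and then the features for that label.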

Pros of Generative Models

Generative models offer significant advantages, chief among them the ability to generate new data, which makes them essential in tasks like deepfake creation, synthetic data generation, and language translation. Unlike discriminative models, generative models can learn from both labeled and unlabeled data, which makes them useful in semi-supervised learning scenarios where labeled data is scarce.

By learning the underlying data distribution, generative models provide deeper insight into the data. They can, for example, estimate how likely a given input is or fill in missing values. This makes them suitable for a wider range of applications beyond simple classification tasks.

Cons of Generative Models

While generative models are powerful, they come with their own set of challenges. First, they are generally more computationally intensive than discriminative models. Modeling the data distribution and learning how to generate new samples can require significant computational resources, especially for complex datasets like images or video.

Another downside is that generative models can be more difficult to train. They often require larger amounts of data and more sophisticated algorithms to effectively capture the underlying distributions. As a result, they may not always perform as well on simple classification tasks, where discriminative models excel.

Key Differences: Discriminative vs. Generative Models

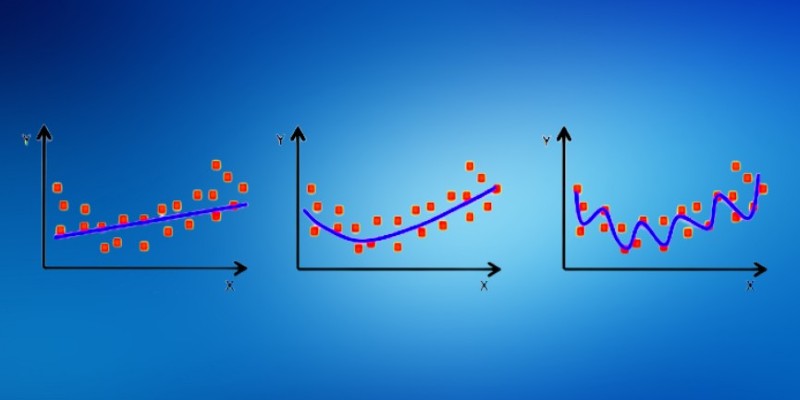

At their core, the key difference between discriminative and generative models lies in what they aim to learn. Discriminative models focus on learning the boundaries between classes, while generative models learn the distribution of data itself.
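The same distinction can be written compactly. In the sketch below, x stands for the features, y for the label, and θ for the parameters of a discriminative model; this notation is assumed here for illustration.

```latex
% Generative: model the joint distribution, then classify via Bayes' rule
p(x, y) = p(x \mid y)\, p(y), \qquad
p(y \mid x) = \frac{p(x \mid y)\, p(y)}{\sum_{y'} p(x \mid y')\, p(y')}

% Discriminative: model the conditional directly, with parameters \theta
p(y \mid x; \theta)
```

A generative model can therefore still be used for classification, by way of Bayes' rule, while a discriminative model skips the joint distribution and estimates the conditional directly.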

Discriminative models are generally more suited for classification tasks, where the goal is to predict the label of a data point based on its features. They excel when labeled data is abundant and computational resources are limited. In contrast, generative models shine in applications that require data generation or understanding of data distribution, such as image creation, text generation, and missing data imputation.

Another important distinction is that discriminative models tend to achieve higher accuracy on classification tasks. In contrast, generative models are more flexible and versatile but can be harder to train and require more computational power.

Conclusion

Discriminative and generative models each serve distinct purposes in machine learning. Discriminative models excel in classification tasks, focusing on separating classes accurately when labeled data is abundant. Generative models, however, are more versatile, capable of generating new data and learning from both labeled and unlabeled data. They offer valuable insights into data distribution and apply to a wide range of tasks beyond classification. The choice between the two depends on the task at hand, with each model offering unique strengths for different use cases.