Training a neural network that performs well in real-world scenarios isn't just about feeding it data; it's about ensuring it can make reliable predictions on unseen inputs. One of the biggest challenges is overfitting, where the model memorizes the training data instead of learning meaningful patterns. Regularization techniques are essential in tackling this issue, helping the model generalize better and perform consistently across different datasets. By applying these techniques, we can keep the model from becoming more complex than the data warrants and reduce its error on data it was not trained on.

This article discusses the main regularization methods that help neural networks balance model complexity against generalization, resulting in more stable and effective models.

What Is Regularization in Neural Networks?

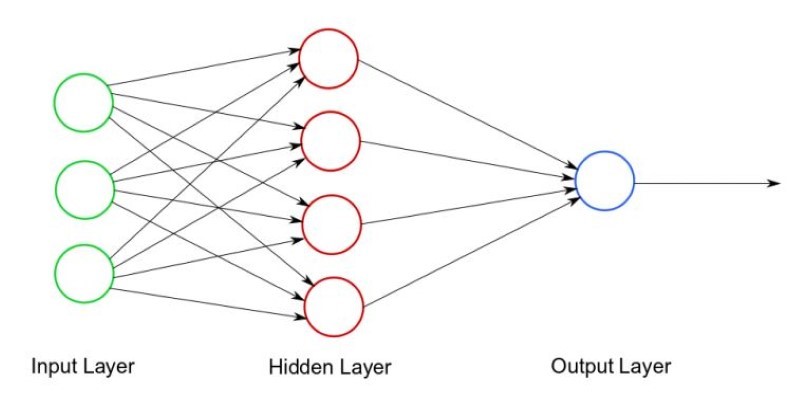

Regularization is a collection of methods applied to limit the complexity of a machine-learning model so that it does not learn irrelevant details that do not generalize. Neural networks, with their deep layers and huge numbers of parameters, are especially susceptible to overfitting. When a model overfits, it learns not only the actual patterns in the data but also the random noise, so its predictions degrade when it is presented with new inputs.

Regularization helps by adding constraints or penalties that restrict excess complexity. Some methods, such as L1 and L2 regularization, do this by altering the model's loss function and adding terms that penalize overly complex solutions. Others, such as dropout, early stopping, and data augmentation, change the training procedure or the training data instead. In each case, the network is pushed to concentrate on significant features rather than memorizing the training data. By directing the model towards simpler, more generalizable solutions, regularization typically improves performance on real data.
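For the penalty-based methods, the modified objective is commonly written in the form below. This is a generic sketch rather than a formula taken from this article: the symbols are assumptions introduced for illustration, with \theta standing for the model's weights, \lambda for a non-negative regularization strength, and \Omega for the penalty term.

```latex
\mathcal{L}_{\text{reg}}(\theta) = \mathcal{L}_{\text{data}}(\theta) + \lambda \, \Omega(\theta),
\qquad
\Omega_{\text{L2}}(\theta) = \sum_i \theta_i^2,
\qquad
\Omega_{\text{L1}}(\theta) = \sum_i \lvert \theta_i \rvert
```

Setting \lambda to zero recovers the unregularized loss, while larger values trade training fit for smaller weights.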

Common Neural Network Regularization Techniques

Neural networks employ a variety of regularization strategies to manage complexity and enhance generalization. All of them aim to make the model learn useful patterns rather than memorize the training data, but they do so in different ways. Below, we discuss six of the most common regularization techniques employed in deep learning.

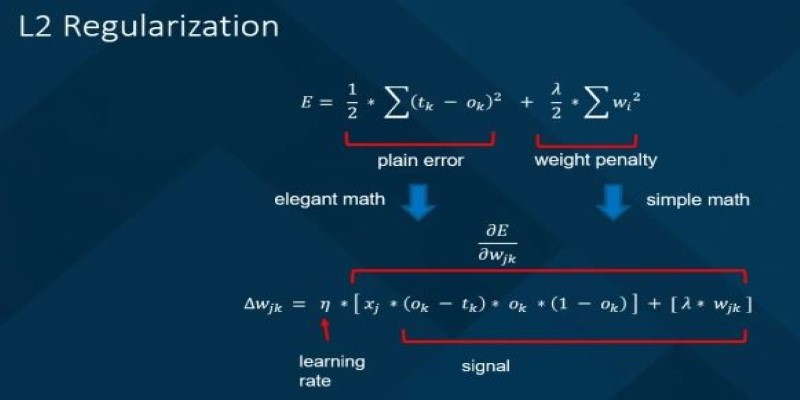

L2 Regularization (Ridge Regularization)

L2 regularization, often referred to as weight decay, is a direct and efficient means of reducing overfitting in neural networks. It adds a penalty to the loss function that is proportional to the sum of the squared weights, which discourages large weights and encourages stable learning.

The technique pushes the model towards small, evenly spread weights and reduces its sensitivity to noise in the training data. As a result, the network is less likely to latch onto irrelevant patterns and tends to generalize better when making predictions on new data.
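As a minimal sketch of the idea, the snippet below computes an L2 penalty and applies one gradient descent step that includes it. The function names, the `lam` and `lr` parameters, and the toy numbers are assumptions made for illustration; real frameworks expose this through their own optimizer or layer options.

```python
def l2_penalty(weights, lam):
    """Return lam * (sum of squared weights), the term added to the loss."""
    return lam * sum(w * w for w in weights)


def sgd_step_l2(weights, grads, lr, lam):
    """One SGD step on data_loss + lam * sum(w^2).

    The penalty contributes 2 * lam * w to each weight's gradient,
    so every step also shrinks the weight towards zero.
    """
    return [w - lr * (g + 2.0 * lam * w) for w, g in zip(weights, grads)]


weights = [0.5, -2.0, 3.0]
grads = [0.0, 0.0, 0.0]  # assume the data loss is flat here, to isolate the penalty
updated = sgd_step_l2(weights, grads, lr=0.1, lam=0.5)
print(l2_penalty(weights, lam=0.5), updated)
```

With a zero data gradient, each weight is simply scaled by the same factor, which is why the penalty is described as decaying the weights.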

L1 Regularization (Lasso Regularization)

L1 regularization, or Lasso regularization, works by adding a penalty proportional to the absolute values of the model’s weights. This method has the unique ability to drive some weights to exactly zero, effectively eliminating less important features and encouraging a sparse model.

L1 regularization is especially useful for feature selection, as it automatically helps the model focus on the most relevant input features. By reducing the number of active parameters, L1 regularization produces simpler, more interpretable models that are less likely to overfit and more likely to perform well on unseen data.
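The sparsity effect can be sketched with a soft-thresholding (proximal) update, which is one common way to handle the L1 penalty; it is an assumption here, not a method named in this article. The function names and toy values are likewise hypothetical.

```python
def l1_penalty(weights, lam):
    """Return lam * (sum of absolute weights), the term added to the loss."""
    return lam * sum(abs(w) for w in weights)


def soft_threshold(w, t):
    """Shrink w towards zero by t; values with |w| <= t become exactly 0.0."""
    if w > t:
        return w - t
    if w < -t:
        return w + t
    return 0.0


def proximal_step_l1(weights, grads, lr, lam):
    """Gradient step on the data loss, followed by soft-thresholding for the L1 term."""
    return [soft_threshold(w - lr * g, lr * lam) for w, g in zip(weights, grads)]


weights = [0.03, -1.5, 0.8, -0.01]
grads = [0.0, 0.0, 0.0, 0.0]  # assume a flat data loss, to isolate the penalty
sparse = proximal_step_l1(weights, grads, lr=0.1, lam=0.5)
print(sparse)
```

The two small weights fall inside the threshold and are set to exactly zero, while the larger ones are only shrunk by a constant amount. This contrasts with L2, which scales weights down but rarely zeroes them.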

Dropout Regularization

Dropout is a regularization technique that works by randomly "dropping out" or deactivating a fraction of neurons during each training iteration. This forces the model to learn more robust features by preventing it from becoming reliant on specific neurons. Because a different random subset of neurons is active at each step, training effectively samples from a large family of thinned networks, making the model less prone to overfitting. At inference time, dropout is switched off and all neurons are used.

This technique is particularly beneficial in deep neural networks, where the complexity of the model can lead to overfitting. By introducing random noise, dropout encourages the model to generalize better and improve its performance on unseen data.
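A minimal sketch of dropout on a list of activations follows. It uses the "inverted" variant, in which surviving activations are rescaled during training so that nothing needs to change at inference; that choice, along with the function name and the `p_drop`, `training`, and `rng` parameters, is an assumption for illustration.

```python
import random


def dropout(activations, p_drop, training, rng):
    """Inverted dropout.

    In training, each activation is zeroed with probability p_drop and the
    survivors are scaled by 1 / (1 - p_drop) so the expected value is unchanged.
    Outside training, the activations pass through untouched.
    """
    if not training or p_drop == 0.0:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)  # seeded only to make the sketch repeatable
hidden = [1.0] * 10
train_out = dropout(hidden, p_drop=0.5, training=True, rng=rng)
eval_out = dropout(hidden, p_drop=0.5, training=False, rng=rng)
print(train_out)
print(eval_out)
```

Each call in training mode draws a fresh mask, so the network never sees exactly the same set of active neurons twice in a row.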

Early Stopping

Early stopping is a regularization strategy that halts the training process when the model's performance on the validation set starts to degrade. Although the model may continue improving on the training data, early stopping ensures that it doesn’t overfit by preventing it from learning noise or irrelevant patterns.

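This technique monitors the validation loss and stops training once it has stopped improving. In practice, training is usually allowed to continue for a few more epochs (often called the patience) so that a single noisy epoch does not end it too soon. By keeping the model checkpoint with the lowest validation loss, early stopping helps preserve its ability to generalize well to new data while avoiding unnecessary complexity or overfitting.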
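The stopping rule can be sketched as a small function over a list of per-epoch validation losses. The `patience` and `min_delta` parameters and the loss values are assumptions chosen for illustration; a real training loop would also save the model weights at the best epoch.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve by more than
    min_delta for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, bad_epochs = loss, epoch, 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return best_epoch, epoch
    # The loss never stalled long enough to trigger a stop.
    return best_epoch, len(val_losses) - 1


history = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]  # made-up validation losses
best_epoch, stop_epoch = early_stopping_epoch(history, patience=2)
print(best_epoch, stop_epoch)  # prints "3 5"
```

Here the loss bottoms out at epoch 3 (zero-based) and training halts at epoch 5, after two epochs without improvement. The checkpoint from epoch 3 is the one to keep.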

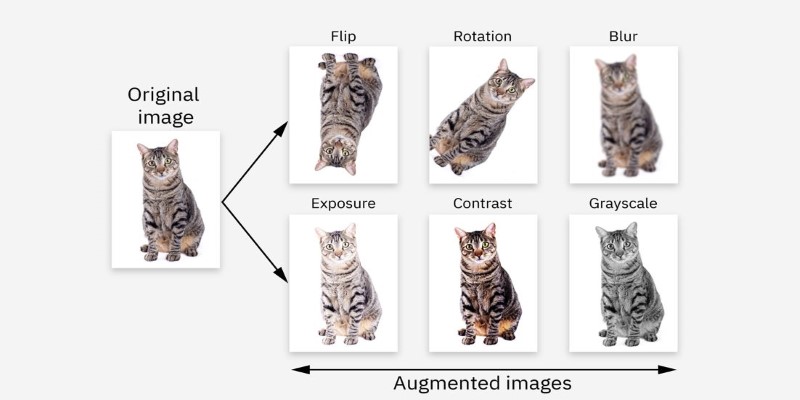

Data Augmentation

Data augmentation is a technique used to artificially expand the training dataset by applying various transformations to the existing data, such as rotations, flips, or color adjustments. This helps the model learn more generalized features rather than memorizing specific examples.

By exposing the neural network to a wider range of input variations, data augmentation reduces overfitting and improves the model's ability to handle new, unseen data. It is especially useful for image and text data, where small variations occur naturally, making the model more robust and adaptable to real-world scenarios.
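As a rough illustration, the snippet below applies random flips and 90-degree rotations to a tiny image stored as a nested list. The helper names and the 50% probabilities are assumptions; image libraries provide far richer transformations than this sketch.

```python
import random


def horizontal_flip(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def rotate90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]


def augment(image, rng):
    """Return a randomly transformed copy; the original image is not modified."""
    out = image
    if rng.random() < 0.5:
        out = horizontal_flip(out)
    if rng.random() < 0.5:
        out = rotate90(out)
    return [list(row) for row in out]


image = [[1, 2],
         [3, 4]]
rng = random.Random(0)  # seeded only to make the sketch repeatable
variants = [augment(image, rng) for _ in range(4)]
print(variants)
```

Every variant contains the same pixels in a different arrangement, so the label stays valid while the model sees more varied inputs. Transformations should be chosen so that this holds for the task at hand; flipping a digit image, for example, can change its meaning.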

Batch Normalization

Batch normalization normalizes the inputs to each layer over the current mini-batch so that they have a consistent distribution, and then applies a learned scale and shift. It was originally motivated as a way to reduce internal covariate shift, where the distribution of layer inputs changes during training and makes it harder for the network to learn effectively.

By stabilizing training, batch normalization allows the network to converge faster and makes it less sensitive to initialization and learning rates. Although it is primarily a normalization method, the noise introduced by per-batch statistics also acts as a mild form of regularization, which can reduce the need for other techniques like dropout. As a result, models trained with batch normalization are often more stable and generalize better.
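The training-time computation can be sketched as follows for a batch stored as a list of rows. The function name and the `gamma`, `beta`, and `eps` parameters are assumptions for illustration, and the running averages that are normally used at inference are left out to keep the sketch short.

```python
import math


def batch_norm_forward(batch, gamma, beta, eps=1e-5):
    """Normalize each feature over the batch, then scale by gamma and shift by beta."""
    n = len(batch)
    n_features = len(batch[0])
    means = [sum(row[j] for row in batch) / n for j in range(n_features)]
    variances = [
        sum((row[j] - means[j]) ** 2 for row in batch) / n
        for j in range(n_features)
    ]
    return [
        [
            gamma[j] * (row[j] - means[j]) / math.sqrt(variances[j] + eps) + beta[j]
            for j in range(n_features)
        ]
        for row in batch
    ]


# Two features on very different scales (made-up values).
batch = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
normalized = batch_norm_forward(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
print(normalized)
```

After normalization, both feature columns have a mean of roughly zero and a variance of roughly one, regardless of their original scale. The learned `gamma` and `beta` then let the network undo or adjust that scaling where it helps.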

Conclusion

Neural network regularization techniques are essential for reducing overfitting and improving model generalization. Methods like L1 and L2 regularization, dropout, early stopping, data augmentation, and batch normalization help control model complexity and improve performance on unseen data. By incorporating these techniques, models become more robust, adaptable, and less prone to memorizing irrelevant patterns. Choosing the right regularization strategy, or combining several, can significantly enhance the model's performance and reliability. Ultimately, mastering these techniques is crucial for building deep learning models that not only fit the training data but also generalize effectively to new, real-world examples.