As artificial intelligence continues to evolve, tech companies have raced to embed generative AI features into nearly every digital tool, from messaging apps and search engines to productivity software and social platforms. While AI promises convenience and innovation, there is growing concern that its widespread, and sometimes unnecessary, integration could cause more frustration than benefit. From privacy risks to poor user experiences and environmental costs, the inclusion of generative AI in every app is not always a welcome change.

Privacy Risks That Can't Be Ignored

A fundamental issue with integrating generative AI into everyday apps is the potential compromise of user privacy. Standalone AI tools such as ChatGPT or Gemini can be used cautiously, but embedding AI into personal tools like Google Docs, WhatsApp, or Messages makes it much harder for users to control what data they expose.

Users often share personal information with AI features built into these platforms without realizing it. OpenAI, for instance, states that it collects "Personal Information that is included in the input, file uploads, or feedback," and this data may be shared with third parties unless users opt out. Researchers have also successfully extracted training data from language models like ChatGPT, raising further questions about how safe user data really is.

Even when big tech companies claim they don’t share personal data, their track records give users reason to doubt them. History has shown that privacy policies can be vague or poorly enforced, which makes the universal adoption of AI in apps a cause for serious concern.

Annoying and Intrusive User Experiences

For many users, the forced integration of AI tools in apps feels less like an enhancement and more like an unwanted intrusion. WhatsApp, for example, now places a Meta AI button prominently in the search bar, and users often trigger it by accident. Suggestions like "Invent a new language" or "Imagine a tasty dessert" have little to do with why someone opens a chat app in the first place.

Similarly, Google's Gemini AI has found its way into services like Gmail, Google Drive, and Google Messages. AI Overviews on Google Search also contribute to cluttered interfaces and unhelpful interruptions.

Rather than streamlining functionality, these intrusive features often make the interface feel bloated and unfocused. When generative AI is given excessive prominence, it distracts from what the user came to do and undermines the simplicity and usability of the app.

AI Isn’t Always the Best Tool for the Job

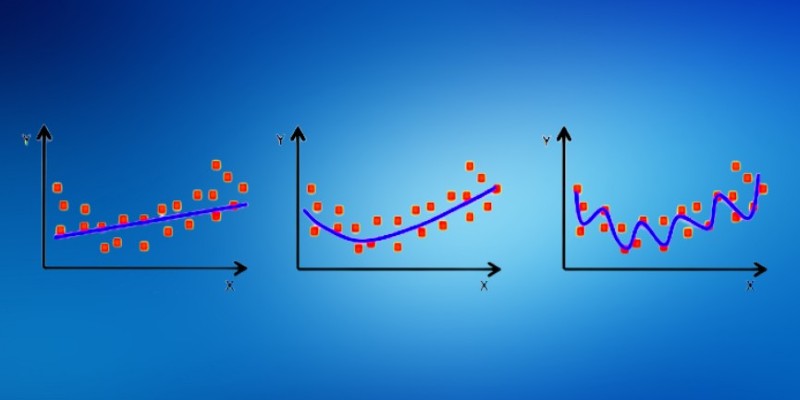

There are scenarios where generative AI adds real value, but that does not mean it should be the default in every app. AI might perform well at summarizing documents or generating text-based content, for example, but it is less effective in contexts that require human nuance, judgment, or emotional intelligence.

The problem intensifies when companies release underdeveloped AI features simply to capitalize on market trends. A notable example is Google’s AI Overviews, which at one point suggested that users add glue to pizza. Such half-baked integrations damage trust and underline that not every app benefits from AI.

Instead of improving productivity or providing insightful support, generative AI sometimes delivers irrelevant or even harmful recommendations, ultimately failing the user.

Decline in Originality and Authenticity

Another concern is the erosion of authenticity in communication and creativity. Generative AI excels at drafting emails or generic responses, but its presence in social and creative apps diminishes the human touch. While using AI for a professional message might be acceptable, relying on it to comment on a friend’s post or send a heartfelt message feels disingenuous.

Moreover, in creative fields, AI-generated content has stirred debate. Artists, writers, and designers often argue that AI cannot match the thoughtfulness, originality, or context-awareness of human creativity. Embedding AI into every artistic or expressive tool could lead to homogenized content, where personal style and emotional resonance are replaced by algorithmic mimicry.

Environmental Cost of AI Overuse

Training and deploying large language models carries a substantial environmental footprint. OpenAI’s GPT-3, for example, reportedly consumed around 1,287 megawatt-hours of electricity during training, enough to power an average U.S. household for over a century. By some estimates, a single AI query can consume nearly 10 times as much energy as a traditional Google search.
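To make the household comparison concrete, here is a rough back-of-envelope check. It is only a sketch: the average household consumption of about 10,500 kWh per year is an assumed ballpark figure, not a number from the sources above, and the function name is purely illustrative.

```python
# Back-of-envelope check of the GPT-3 training-energy comparison.
# ASSUMPTION: an average U.S. household uses roughly 10,500 kWh of
# electricity per year (a ballpark figure, not taken from the article).
TRAINING_ENERGY_MWH = 1_287        # reported GPT-3 training energy
HOUSEHOLD_KWH_PER_YEAR = 10_500    # assumed average household use


def household_years(training_mwh: float, household_kwh_per_year: float) -> float:
    """Return how many years the training energy could supply one household."""
    training_kwh = training_mwh * 1_000  # 1 MWh = 1,000 kWh
    return training_kwh / household_kwh_per_year


years = household_years(TRAINING_ENERGY_MWH, HOUSEHOLD_KWH_PER_YEAR)
print(f"{years:.0f} years")  # prints "123 years"
```

Under this assumption the result lands at roughly 120 years; even if the household figure is somewhat higher, the "over a century" comparison still holds.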

These statistics become even more troubling when generative AI is embedded into apps where it adds little to no functional value. In such cases, the energy expended serves no meaningful purpose, contributing to increased carbon emissions without offering real user benefits.

Integrating AI everywhere, whether or not it is needed, not only raises ethical questions but also runs counter to global efforts toward sustainability.

The Loss of User Control and Simplicity

With every AI feature added to an app, the interface becomes more complex and user behavior is monitored more closely. What were once straightforward tools are now loaded with features users didn’t ask for, don’t use, or can’t easily disable.

This complexity hurts users who prefer minimal, distraction-free environments. Writers and other professionals, for example, may find AI suggestions disruptive while drafting. The more deeply these features are baked into every corner of an application, the less control users have over their own workflows.

Making matters worse, users have limited ability to opt out. Often they cannot disable these AI integrations entirely, so they must either tolerate them or seek alternative platforms.

Conclusion

There is no doubt that generative AI is powerful, innovative, and useful in many scenarios. Yet, its blanket integration into every app and service is problematic. From privacy risks and energy waste to UI clutter and a decline in human authenticity, the drawbacks are too significant to ignore.

Apps should give users the choice to use AI rather than force it upon them. When implemented thoughtfully and with user consent, generative AI can be a powerful tool. But when it’s blindly embedded everywhere, it risks turning convenience into intrusion, and innovation into annoyance.

In an age of personalization and user empowerment, tech companies must listen more closely to what people actually want, and sometimes what they want is an app without AI.