Artificial intelligence has taken creativity to the next level with models that generate images, music, and even human-like text. Among these, Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) stand out as two of the most powerful tools in deep learning. However, while both are generative models, they function in very different ways.

VAEs take a structured, probabilistic approach, while GANs rely on an adversarial game between two networks. These differences impact everything from how they generate data to where they are used. If you've ever wondered how AI creates, this breakdown of VAEs and GANs will clear things up.

How VAEs and GANs Work

Understanding the mechanisms behind VAEs and GANs helps in choosing the right model for specific AI applications.

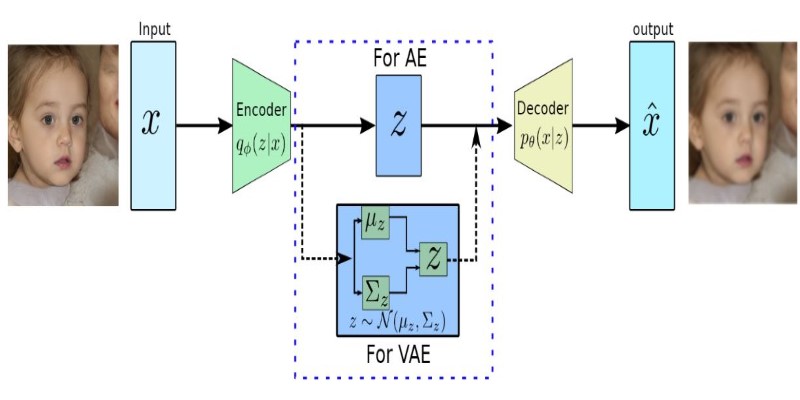

Variational Autoencoders (VAEs)

A Variational Autoencoder (VAE) is a deep generative model that compresses its input into a lower-dimensional latent representation and then reconstructs it, producing slightly varied versions rather than exact copies. VAEs are based on probabilistic inference: they learn to approximate the distribution of the input data instead of memorizing individual examples. Because of this, VAEs are mostly used in situations that call for controlled, structured data generation.

A VAE consists of an encoder and a decoder. The encoder maps the input data into a latent space in which every point corresponds to a potential variation of the input. The decoder then reconstructs data from this compressed representation, so that the outputs are meaningful variations of the training data rather than copies of it. VAEs introduce an element of randomness into the latent space, which allows them to produce smooth, diverse, and interpretable outputs.
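The randomness in the latent space is commonly implemented with what is known as the reparameterization trick. The sketch below assumes the encoder outputs a mean and a log-variance for each latent dimension (a diagonal Gaussian); the function name `sample_latent` and the example values are hypothetical and stand in for a real encoder's output.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1), one value per latent dimension."""
    z = []
    for m, lv in zip(mu, log_var):
        sigma = math.exp(0.5 * lv)   # log-variance -> standard deviation
        eps = rng.gauss(0.0, 1.0)    # the injected randomness
        z.append(m + sigma * eps)
    return z

# Hypothetical encoder output for a single input: a 3-dimensional latent code.
mu = [0.5, -1.0, 2.0]
log_var = [-2.0, -2.0, -2.0]

rng = random.Random(0)               # seeded so the run is repeatable
z1 = sample_latent(mu, log_var, rng)
z2 = sample_latent(mu, log_var, rng)
print(z1)
print(z2)
```

Both samples come from the same input, yet they differ slightly. Decoding each of them is what would yield varied outputs instead of a single fixed reconstruction.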

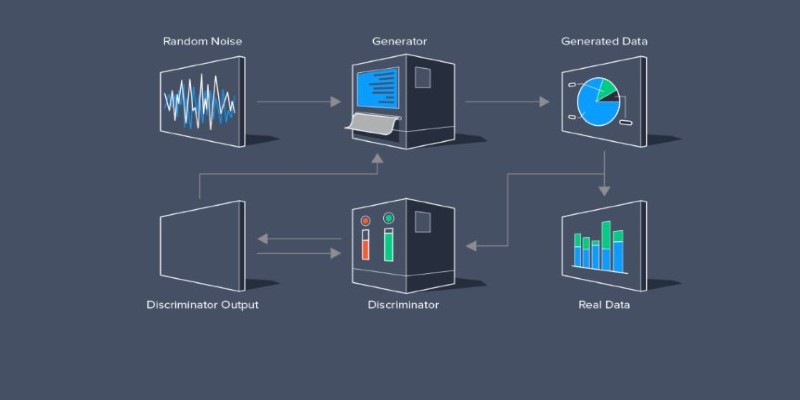

Generative Adversarial Networks (GANs)

A Generative Adversarial Network (GAN) is built on the concept of competition between two neural networks: a generator and a discriminator. The generator creates synthetic data samples, while the discriminator evaluates whether a given sample is real or fake. This adversarial process continues until the generator produces outputs that are so convincing that the discriminator can no longer distinguish them from real data.
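To make the competition concrete, here is a minimal sketch of the two losses, assuming the discriminator outputs a probability that a sample is real and that both networks are scored with binary cross-entropy. The helper names and the example probabilities are hypothetical, and the generator loss shown is the commonly used non-saturating variant rather than the only possible choice.

```python
import math

def bce(prob, label):
    """Binary cross-entropy for a single predicted probability."""
    eps = 1e-12  # guards against log(0)
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants real samples scored as 1 and generated samples as 0."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """The generator wants its own samples scored as 1 (non-saturating variant)."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs: probability that each sample is real.
real = [0.9, 0.8, 0.9]
early_fake = [0.1, 0.2, 0.1]   # fakes are easy to spot
later_fake = [0.5, 0.5, 0.5]   # discriminator can no longer tell

print(discriminator_loss(real, early_fake))
print(generator_loss(early_fake), generator_loss(later_fake))
```

The generator's loss drops as the discriminator's scores for fake samples move toward 0.5, which corresponds to the point where it can no longer separate real from generated data.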

GANs are particularly known for their ability to create high-quality, realistic images. The training process involves an ongoing battle between the generator and discriminator, where both networks improve over time. Unlike VAEs, GANs do not fit an explicit probability distribution over the data; the generator simply learns to map random noise to samples, which tends to produce sharper and more detailed outputs. However, this also means GANs lack the encoder and the regularized latent space that VAEs provide, making them harder to control in certain applications.

Key Differences Between VAEs and GANs

While VAEs and GANs both belong to the generative model family, they differ significantly in how they generate data, how they are trained, and what their outputs look like.

Data Generation Approach

One of the biggest differences between VAEs and GANs lies in how they generate data. VAEs use a structured, probabilistic method to model distributions, ensuring controlled and interpretable variations in outputs. GANs, on the other hand, employ an adversarial training system where two neural networks compete to improve data realism. This contrast affects the quality, realism, and level of control over generated content.

Output Quality and Realism

GANs typically produce sharper and more visually realistic images than VAEs. The adversarial nature of GAN training forces the generator to refine its outputs continuously, creating data that closely resembles real-world samples. However, GANs can suffer from mode collapse, where the model generates only a limited range of variations instead of diverse outputs.

VAEs, in contrast, generate more structured and interpretable data. Their reliance on latent space distributions allows for predictable variations, making them ideal for applications like 3D object modeling, speech synthesis, and text generation, where smooth transitions between generated samples are crucial.
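The smooth transitions mentioned above are usually obtained by interpolating between two latent codes and decoding each intermediate point. The sketch below covers only the interpolation step, using plain linear interpolation; the function name `interpolate` and the two example vectors are hypothetical, and a trained decoder would be needed to turn each point into an actual sample.

```python
def interpolate(z_start, z_end, steps):
    """Return `steps` latent vectors evenly spaced on the line from z_start to z_end."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # runs from 0.0 to 1.0
        path.append([(1 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path

# Hypothetical latent codes for two different inputs.
z_a = [0.0, 1.0]
z_b = [2.0, -1.0]

path = interpolate(z_a, z_b, 5)
print(path[2])  # midpoint of the path: [1.0, 0.0]
```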

Training Complexity

GANs are difficult to optimize because training requires a delicate balance between the generator and discriminator. If one network becomes too dominant, the model may fail to train properly, making GAN training unstable and computationally expensive.

VAEs, by contrast, follow a more stable and straightforward training process. They minimize a single well-defined loss function, typically a reconstruction error plus a regularization term on the latent distribution, which makes optimization easier and more predictable than the adversarial setup of GANs. As a result, VAEs are often favored in applications that require structured, controlled generation rather than ultra-realistic outputs.
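As an illustration of what such a loss can look like, the sketch below combines a reconstruction term with the closed-form KL divergence between a diagonal Gaussian and a standard normal. The choice of squared error for reconstruction and the `beta` weighting parameter are assumptions made for this example; other reconstruction terms, such as binary cross-entropy, are also common.

```python
import math

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))

def squared_error(x, x_hat):
    """Reconstruction term, here a plain sum of squared errors (an assumption)."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

def vae_loss(x, x_hat, mu, log_var, beta=1.0):
    """Reconstruction error plus a weighted KL regularizer (beta is hypothetical)."""
    return squared_error(x, x_hat) + beta * kl_to_standard_normal(mu, log_var)

# Hypothetical values: an input, its reconstruction, and the encoder's output.
x = [1.0, 2.0]
x_hat = [0.0, 2.0]
mu = [1.0]
log_var = [0.0]

print(vae_loss(x, x_hat, mu, log_var))  # 1.0 reconstruction + 0.5 KL = 1.5
```

Because the whole objective is one scalar that a single optimizer can minimize, there is no second network to keep in balance, which is a large part of why training tends to be more stable.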

Real-World Applications of VAEs and GANs

Both VAEs and GANs have found applications across multiple industries, each excelling in different areas.

GANs are widely used in image generation to create ultra-realistic images. They power applications such as deepfake technology, AI-generated portraits, and art generation. Companies like NVIDIA have leveraged GANs to develop AI-driven image enhancement and video frame interpolation tools.

Due to their structured nature, VAEs are commonly used in data compression and interpolation. For instance, they help reduce noise in images and videos while preserving meaningful details. Additionally, VAEs are applied in the medical field for MRI and CT scan analysis, where generating realistic yet controlled variations of medical images aids in diagnosis and research.

Another important area where these models differ is text generation. GANs have been used in natural language processing to create realistic AI-generated stories, while VAEs play a role in controlled text synthesis and machine translation. Since VAEs map text into an interpretable latent space, they are useful for generating text under specific constraints.

In the gaming industry, GANs create high-resolution textures and realistic character models, while VAEs assist in level design and procedural content generation, ensuring smooth transitions between different game environments.

Conclusion

VAEs and GANs are two powerful generative models with distinct strengths. VAEs offer structured, controlled data generation, making them ideal for applications requiring smooth variations. GANs, on the other hand, create highly realistic outputs through adversarial training, excelling in image generation and creative AI tasks. While GANs produce sharper images, they require complex tuning, whereas VAEs are easier to train and interpret. The choice between them depends on the need for realism versus control. As AI evolves, hybrid models are emerging, blending the best of both. Understanding these differences helps in selecting the right model for specific applications.